Recognizing Ambient Noise and Constructing Virtual Buildings

emergenCITY researchers discussed their latest research at the internal DemoFair

Serious games, real-time code generation, classification of ambient noise: at the DemoFair on February 24, emergenCITY scientists presented the current status of their research to one another. More than 50 participants, including PhD students, research assistants and PIs from all three participating universities, engaged in a lively exchange about a wide variety of software and hardware. This internal networking provides a valuable overview of the many projects progressing in parallel at emergenCITY.

Among the exhibits were well-known flagships of emergenCITY research: the rescue robot Scout, a revised version of the serious game Krisopolis and an enhanced version of the Nexus demonstrator from the German Aerospace Center (DLR). Using a model of the advertising pillar 4.0, emergenCITY scientist Joachim Schulze explained the current development status of the pillar's first prototype, which features an information display. emergenCITY will set up this prototype in Darmstadt in cooperation with the advertising marketer Ströer.

Frank Hessel’s team also presented the sensor boxes that are to be installed in the Lichtenberg district, including a new sensor that can classify ambient noise. It was developed by Hicham Bellafkir and Markus Vogelbacher. The program, called Urban Sound Classification, recognizes and classifies more than 70 types of ambient noise in public spaces, ranging from barking dogs to music and sirens.

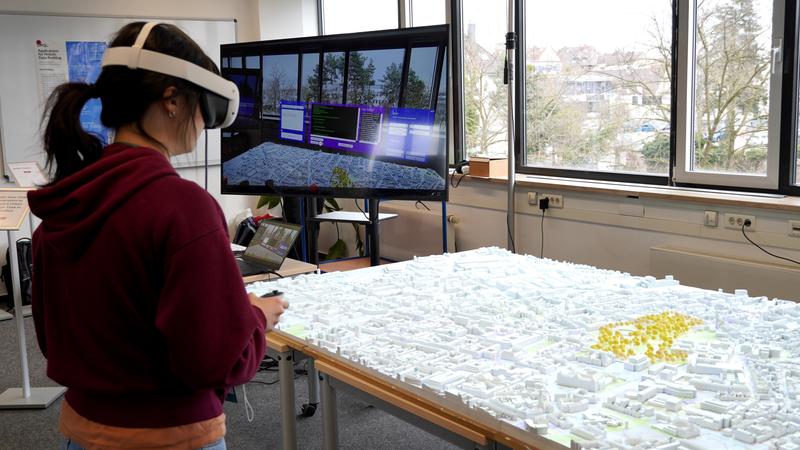

Other exhibits also showed new developments integrated into demonstrators that already exist in the lab. Yanni Mei’s Real-time Code Generation is based on the emergenCITY city model of Darmstadt. In an augmented reality (AR) version of the model, the researcher is developing software that city planners could use to locate existing buildings in Darmstadt and design new ones virtually. Users can observe the effects of these changes, for example on traffic flow, live on the animated city model City MoViE.

Using voice input and artificial intelligence, Yanni Mei has developed a program that converts a spoken command in the augmented reality interface directly into a ChatGPT prompt. ChatGPT then generates the code needed to carry out the command on the model. If a user says “Locate the hospital!”, a red circle appears around the Darmstadt hospital in the virtual city model after just a few seconds. The aim is to enable city planners without in-depth programming knowledge to work creatively in the model's augmented reality environment.